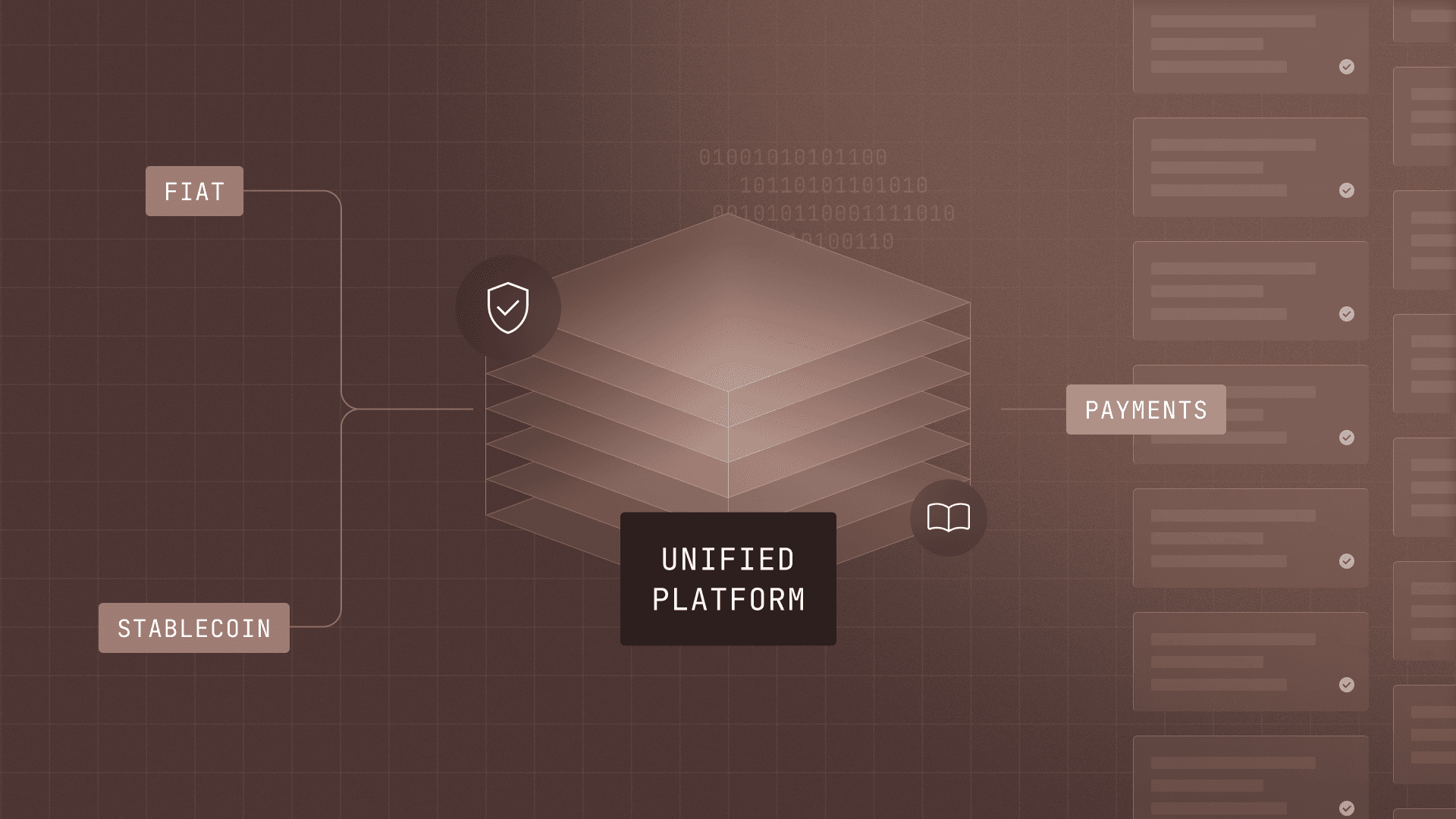


How Well Do LLMs Know Double-Entry Accounting?

Explores how large language models perform double-entry accounting tasks, testing open-source and frontier models on charts of accounts and ledger transactions. Evaluates accuracy, balance, and accounting correctness across real-world scenarios.

In order to use a ledger database, you must first understand double-entry accounting. Learning this centuries-old tracking method is a tall order for our customers, even with our Accounting for Developers series and dedicated solutions architecture team. It took me almost a year of working with ledgers every day to feel like I had developed deep enough intuition to design a chart of accounts from scratch.

Among my surprises: How can a debit increase the balance on an account? Why is revenue a liability and not an asset? A payout seems to decrease both my bank account and my user liabilities. How can two decreases possibly balance to zero?
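
A small worked example can make the last question concrete. The sketch below is illustrative only: the account names, the amounts, and the `balance` helper are assumptions, not taken from any real chart of accounts. It models a payout that credits a debit-normal cash account and debits a credit-normal user liability account, so both balances fall while the transaction's debits and credits stay equal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    account: str
    direction: str  # "debit" or "credit"
    amount: int     # in cents


# Hypothetical accounts and their normal balances.
NORMAL_BALANCE = {"cash": "debit", "user_wallet": "credit"}


def balance(account: str, entries: list[Entry]) -> int:
    """Entries in the account's normal direction increase it; the rest decrease it."""
    total = 0
    for e in entries:
        if e.account != account:
            continue
        total += e.amount if e.direction == NORMAL_BALANCE[account] else -e.amount
    return total


# A $100 deposit: cash (asset) goes up with a debit, the amount owed to the user goes up with a credit.
deposit = [Entry("cash", "debit", 10_000), Entry("user_wallet", "credit", 10_000)]
# A $100 payout: one credit and one debit of equal size, so the transaction balances.
payout = [Entry("cash", "credit", 10_000), Entry("user_wallet", "debit", 10_000)]

print(balance("cash", deposit), balance("user_wallet", deposit))
print(balance("cash", deposit + payout), balance("user_wallet", deposit + payout))
```

Both accounts rise after the deposit and both fall after the payout, yet each transaction contains exactly one debit and one credit for the same amount. What has to balance is debits against credits within a transaction, not increases against decreases.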

These common points of confusion got us thinking: can our customers use LLMs to create charts of accounts? Surely an accounting expert can prompt their way to getting a decent chart of accounts. But can the models help out beginners?

The short answer is: they can! And we have the code to prove it.

Here are the questions we set out to answer with some science:

- Can LLMs consistently generate charts of accounts and transactions given natural-language prompts?

- Are some LLMs better than others?

- What are the limits of the LLMs we have access to today?

- Can we build a process to quickly evaluate new LLMs?

The setup

To compare models consistently, I built a command-line tool that takes in a model name and a YAML file of prompts, and outputs ledger accounts and transactions in a standardized format. Under the hood, the tool abstracts away the underlying models and SDKs into a common interface that outputs a Pydantic type.

This interface requires models to be capable of structured output. All recent models from major providers are capable of this natively, and even smaller open-source models can do it with a bit of prompting. You can see the details in the clients directory.
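
As a rough sketch of what a common interface of this kind could look like, consider the following. Apart from `ChartOfAccounts`, every name here (`LedgerAccount`, `ModelClient`, `CannedClient`, `run`, and the field names) is an assumption made for illustration, and standard-library dataclasses stand in for Pydantic models so the example has no dependencies.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class LedgerAccount:
    name: str
    normal_balance: str  # "debit" or "credit"


@dataclass(frozen=True)
class ChartOfAccounts:
    accounts: list[LedgerAccount]


class ModelClient(Protocol):
    """Anything that can turn a prompt into a structured chart of accounts."""

    def generate_chart_of_accounts(self, prompt: str) -> ChartOfAccounts: ...


class CannedClient:
    """Hypothetical stand-in for a provider SDK wrapper; returns a fixed answer."""

    def generate_chart_of_accounts(self, prompt: str) -> ChartOfAccounts:
        return ChartOfAccounts(
            accounts=[
                LedgerAccount("cash", "debit"),
                LedgerAccount("user_balances", "credit"),
            ]
        )


def run(client: ModelClient, prompt: str) -> ChartOfAccounts:
    # The caller only sees the shared interface, never the provider-specific SDK.
    return client.generate_chart_of_accounts(prompt)


chart = run(CannedClient(), "A digital wallet app with one user.")
print([account.name for account in chart.accounts])
```

Because `ModelClient` is a structural protocol, a wrapper around any provider satisfies it simply by implementing the method, which is one way to keep the test runner independent of individual SDKs.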

The test runner prompts the models in a pipeline:

1. The model is asked to generate a chart of accounts for a business described in natural language. Crucially, I included a direction to “create the minimum number of accounts to satisfy the requirements.” Some models, regardless of size or training method, are eager to add many accounts that aren’t directly related to the requirements outlined in the prompt.

2. The result from (1) is parsed into a ChartOfAccounts model (a list of ledger accounts).

3. The model is then prompted to generate transactions, with the parsed ChartOfAccounts and per-transaction instructions included in the prompt.

4. The result from (3) is parsed into a FundFlow model (a list of ledger transactions).
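
The pipeline steps can be sketched roughly as follows. This is not the tool's actual code: `run_pipeline`, `fake_model`, the prompt wording (other than the quoted "minimum number of accounts" direction), and the JSON shapes are assumptions, and plain dataclasses stand in for the Pydantic models.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class ChartOfAccounts:
    accounts: list[dict]  # e.g. {"name": "cash", "normal_balance": "debit"}


@dataclass
class FundFlow:
    transactions: list[dict]


MINIMAL = "Create the minimum number of accounts to satisfy the requirements."


def run_pipeline(
    ask_model: Callable[[str], str], business: str, steps: list[str]
) -> tuple[ChartOfAccounts, FundFlow]:
    # Prompt for a chart of accounts and parse the structured result.
    chart = ChartOfAccounts(accounts=json.loads(ask_model(f"{business}\n{MINIMAL}")))
    # Feed the parsed chart back in, together with the per-transaction instructions.
    prompt = "Accounts:\n" + json.dumps(chart.accounts) + "\nTransactions:\n" + "\n".join(steps)
    flow = FundFlow(transactions=json.loads(ask_model(prompt)))
    return chart, flow


def fake_model(prompt: str) -> str:
    """Canned responses so the sketch runs without a model provider."""
    if prompt.startswith("Accounts:"):
        return json.dumps([{"description": "deposit", "entries": [
            {"account": "cash", "direction": "debit", "amount": 5000},
            {"account": "user_balances", "direction": "credit", "amount": 5000},
        ]}])
    return json.dumps([
        {"name": "cash", "normal_balance": "debit"},
        {"name": "user_balances", "normal_balance": "credit"},
    ])


chart, flow = run_pipeline(fake_model, "A digital wallet app with one user.", ["User deposits $50."])
print(len(chart.accounts), len(flow.transactions))
```

Passing the parsed chart into the second prompt, rather than the raw first response, means the transaction step only ever sees accounts that survived parsing.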

There are two prompts for each scenario, specified in a YAML format:

- Digital Wallet (simple): a digital wallet app with one user. Covers deposits and withdrawals.

- Digital Wallet (complex): adds the complexity of multiple users and a peer-to-peer transaction. The user that deposits money is different from the user that withdraws money.

- Payroll (simple): a payroll platform that has only one employer. Transactions should show the employer paying the platform for wages, and then the platform paying out employees.

- Payroll (complex): adds the complexity of multiple employers, each with their own employees.

They are designed to be short prompts that focus on the business use case, but have no double-entry accounting knowledge built-in. We’re testing the models, not building a state-of-the-art chart of accounts generator.

The rubric

Now that I had a way to pass the same prompt to multiple models and get back structured responses, I needed a way to evaluate each model’s performance. At first, I expected to write heuristic tests, such as asserting that a result should have only three accounts, and only one should be debit-normal. But I quickly realized that this would result in many false negatives. There are many valid answers to the scenarios posed in the prompts.

Entire companies have sprung up over the past three years to automatically evaluate model performance. I didn’t want to add that task to the scope of this experiment, so I graded each model’s output by hand. To keep the grading fair, I applied the same four criteria to every model:

- Chart of accounts correct: does the generated chart of accounts track all important balances for the use case?

- Account normality correct: is the normal balance correct on each account? Accounts that track assets should be debit-normal; liabilities should be credit-normal.

- Transactions balanced: within each transaction, do total debits equal total credits?

- Entries correct: do the transactions have entries that model the flow of funds? Would the resulting balances on accounts make sense?
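
Two of these criteria, account normality and transaction balance, are mechanical enough to express in code, even though the grading here was done by hand. The sketch below is an assumption about how such checks might look, not the method used in this experiment. The `type` field, the dictionary shapes, and the function names are hypothetical, and the expense and revenue rows follow standard accounting convention.

```python
from collections import defaultdict

# Expected normal balance by account type.
EXPECTED_NORMAL = {
    "asset": "debit",
    "expense": "debit",
    "liability": "credit",
    "revenue": "credit",
}


def normality_errors(accounts):
    """Return the names of accounts whose normal balance contradicts their type."""
    return [a["name"] for a in accounts if a["normal_balance"] != EXPECTED_NORMAL[a["type"]]]


def is_balanced(transaction):
    """A transaction is balanced when its total debits equal its total credits."""
    totals = defaultdict(int)
    for entry in transaction["entries"]:
        totals[entry["direction"]] += entry["amount"]
    return totals["debit"] == totals["credit"]


accounts = [
    {"name": "cash", "type": "asset", "normal_balance": "debit"},
    {"name": "user_balances", "type": "liability", "normal_balance": "debit"},  # wrong on purpose
]
deposit = {"entries": [
    {"account": "cash", "direction": "debit", "amount": 5000},
    {"account": "user_balances", "direction": "credit", "amount": 5000},
]}
single_entry = {"entries": [{"account": "cash", "direction": "debit", "amount": 5000}]}

print(normality_errors(accounts))
print(is_balanced(deposit), is_balanced(single_entry))
```

The other two criteria, whether the chart of accounts tracks the right balances and whether the entries model the flow of funds, depend on the use case and are much harder to reduce to assertions like these.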

The results: open-source models

While building the command-line tool, I used open-source models running on my MacBook. These were chosen from the list of models supported by Ollama and were ones I had heard about and was interested in trying. Using open-source models helped keep the iteration loop fast. I didn’t have to juggle API keys or set up billing with model providers. The downside was that my laptop got very hot!

Overall, the open-source models I tried were a mixed bag but exceeded my expectations. They provide a useful baseline to compare against the state-of-the-art models. Every model I tried was able to follow the pipeline (using the generated chart of accounts in its transactions), and every model got at least some things correct.

OpenAI’s open-source GPT model (gpt-oss-20b) performed the best, and was also the biggest model I could run on my laptop. DeepSeek got some things right, but only the simple digital wallet example finished running. Gemma 3 was fast and could generate reasonable-looking results, but mostly did not conform to double-entry accounting standards.

You can see all the model outputs here.

Digital Wallet (simple)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| gemma3 | ❌ Generated too many accounts, some duplicates, irrelevant to the use case. | ❌ User liability account was incorrect (should be credit-normal). Expenses were also incorrect (should be debit-normal). | ❌ Debits did not equal credits on any transaction. | ❌ The amounts matched the prompt, but the direction was incorrect on some. Also included unnecessary zero-amount entries. |
| deepseek-r1-8b | ❌ Correct accounts for revenue and assets, but no way to track user liabilities. | ✅ Normality was correct for assets and revenue. | ❌ Transactions were single-entry. | ❌ The entries were correct on the asset account, but incorrect because there were no entries on the liability side. |
| gpt-oss-20b | ✅ Correct accounts for storing cash and user liabilities. Included accounts that weren’t asked for (revenue and profit), but they were correct. | ✅ Normality was correct on all accounts. | ✅ Transactions were all correctly balanced. | ✅ The entries respected account normality and were the correct amount. |

Digital Wallet (complex)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| gemma3 | ❌ Too many accounts, and missing some user liability accounts | ❌ Wrong normality for user liabilities and expenses | ❌ Some transactions are balanced, but not all | ❌ Entries are mostly incorrect on the transactions that impact both liabilities and equity. The transaction between users looks good, but has unnecessary zero-amount entries |
| deepseek-r1-8b | ❌ Timed out | ❌ Timed out | ❌ Timed out | ❌ Timed out |
| gpt-oss-20b | ✅ Correct accounts, did not include any unnecessary accounts | ✅ Account normality was correct on all accounts | ✅ Transactions were all balanced correctly | ✅ Entries were correct and respected account normality |

Payroll (simple)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| gemma3 | ❌ Some accounts are correct, most aren’t. There are a bunch of unnecessary accounts as well. | ❌ Some are correct, but many aren’t. For example, the receivable account should be debit-normal. | ✅ Debits match credits on all transactions | ❌ All transactions are mostly nonsensical |
| deepseek-r1-8b | ❌ Timed out | ❌ Timed out | ❌ Timed out | ❌ Timed out |
| gpt-oss-20b | ✅ Generated the correct asset and liability accounts | ✅ All accounts had correct normality (including the payroll expense account) | ❌ Payout transaction is unbalanced | ❌ Accruing wages is correct, but the payout transaction both debits and credits the cash account. |

Payroll (complex)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| gemma3 | ❌ Too many accounts, and inconsistent point of view. Can’t decide whether it’s a payroll platform or if it’s paying its own employees. | ❌ Frequently incorrect, e.g. employer receivables and employee liabilities | ✅ Debits match credits on all transactions | ❌ All transactions are mostly nonsensical |
| deepseek-r1-8b | ❌ Timed out | ❌ Timed out | ❌ Timed out | ❌ Timed out |
| gpt-oss-20b | ✅ Correctly modeled the cash account, as well as liabilities for employers and employees | ✅ All accounts had correct normality | ✅ All transactions were balanced. The model added up per-employee liabilities correctly. | ❌ Very close, but the model assumed all employees belonged to the first employer. |

The results: frontier models

Now that I had a working test harness, it was time to test the latest and greatest models. Overall, it’s clear that these models are better at accounting than a beginner.

That said, only Gemini 3 made no mistakes. GPT-5.2 performed better on the “complex” prompts than on the “simple” ones, which suggests that more precise prompts may yield better results. Opus 4.5 made one fundamental error on the more complex payroll use case, but otherwise passed with flying colors.

You can see all the model outputs here.

Digital Wallet (simple)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| GPT-5.2 | ✅ Includes a user liability and a platform assets account. Nothing for per-user liabilities, but that’s not specified in the prompt. | ✅ All accounts correct | ✅ Debits match credits on all transactions | ✅ Entries were correct and respected account normality |
| Gemini 3 | ✅ Modeled assets and user liabilities. | ✅ All accounts correct | ✅ Debits match credits on all transactions | ✅ Entries were correct and respected account normality |
| Opus 4.5 | ✅ Modeled cash and user liabilities. Also added fees and operating expenses (not in the prompt) | ✅ All accounts correct | ✅ Debits match credits on all transactions | ✅ Entries were correct and respected account normality |

Digital Wallet (complex)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| GPT-5.2 | ✅ Correctly modeled per-user liabilities and platform assets | ✅ All accounts correct | ✅ Debits match credits | ✅ All transactions correct |
| Gemini 3 | ✅ Correctly modeled per-user liabilities and platform assets | ✅ All accounts correct | ✅ Debits match credits | ✅ All transactions correct |
| Opus 4.5 | ✅ Correctly modeled per-user liabilities and platform assets | ✅ All accounts correct | ✅ Debits match credits | ✅ All transactions correct |

Payroll (simple)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| GPT-5.2 | ✅ Modeled cash, and employer and employee liabilities | ✅ All accounts correct | ❌ Debits do not match credits | ❌ Direction wrong on some entries. Some entries cancel out other entries. Do not result in correct balances. |
| Gemini 3 | ✅ Modeled cash, and employer and employee liabilities | ✅ All accounts correct | ✅ Debits match credits | ✅ All transactions correct |
| Opus 4.5 | ✅ Modeled cash, and employer and employee liabilities. Called the employee receivable account a ‘cash’ account, but otherwise everything was correct. | ✅ All accounts correct | ✅ Debits match credits | ✅ All transactions correct |

Payroll (complex)

| Model | Chart of Accounts correct? | Account normality correct? | Transactions balanced? | Entries correct? |
|---|---|---|---|---|
| GPT-5.2 | ✅ Modeled assets (cash and receivables) correctly. Modeled the tree of employer and employee accounts. | ✅ All accounts correct | ✅ Debits match credits | ✅ Modeled multiple legs correctly – accruing wages, employer funding, and then paying out. |
| Gemini 3 | ✅ Modeled cash correctly. Modeled the tree of employer and employee accounts. Did not model receivables, but this is a valid way to ledger. | ✅ All accounts correct | ✅ Debits match credits | ✅ Modeled multiple legs correctly – employer pre-funding, allocating wages, and then paying out. |
| Opus 4.5 | ✅ Modeled cash correctly. Modeled the tree of employer and employee accounts. Did not model receivables, but this is a valid way to ledger. | ✅ All accounts correct | ✅ Debits match credits | ❌ Treats the employer funding account like a receivable, when the chart of accounts has it as a liability. Means that balances would go negative. |
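
To see why treating a liability like a receivable can push balances negative, consider the small sketch below. The entries and amounts are invented for illustration and are not Opus 4.5's actual output, and `running_balances` is a hypothetical helper. It applies the same pair of entries in two different orders to a credit-normal employer funding account.

```python
def running_balances(normal_balance, entries):
    """Return the account balance after each (direction, amount) entry."""
    balance, history = 0, []
    for direction, amount in entries:
        # Entries in the account's normal direction increase it; the rest decrease it.
        balance += amount if direction == normal_balance else -amount
        history.append(balance)
    return history


# Receivable-style flow: debit when wages accrue, credit when the employer pays.
receivable_style = [("debit", 3000), ("credit", 3000)]
# Liability-style flow: credit when the employer pre-funds, debit when wages are allocated.
liability_style = [("credit", 3000), ("debit", 3000)]

# The employer funding account is credit-normal (a liability) in the chart of accounts.
print(running_balances("credit", receivable_style))
print(running_balances("credit", liability_style))
```

Both flows end at zero, but the receivable-style ordering dips to -3000 along the way, because a debit posted to a credit-normal account reduces its balance.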

Conclusions

These results come from a very small sample, and they capture model performance at a single point in time. Different prompts would likely surface new kinds of failures across models, and future models may perform better or worse than today’s results suggest. All model output needs to be double-checked by a human to ensure accuracy.

That said, the results above show there are a lot of nuances state-of-the-art LLMs get right consistently:

- Turning natural language into the precise language of double-entry accounting

- Following a chain of prompts: first generating a chart of accounts, and then transactions using those accounts

- The correct normal balance for different kinds of accounts

- A consistent point of view for the ledger (e.g. a fintech payroll platform, not a business paying its own employees)

- Balancing credits and debits

As more teams experiment with AI-assisted accounting or use LLMs to prototype financial logic, reliable ledger infrastructure becomes even more important. Models can help with exploration and learning, but correctness in the flow of funds still depends on systems that enforce invariants and safety at every step.
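
As a minimal sketch of what enforcing invariants at write time can mean, the toy ledger below refuses to post a transaction that references an unknown account or whose debits and credits differ. The `Ledger` class and its `post` method are hypothetical and do not represent any real ledger product's API.

```python
class Ledger:
    """Toy in-memory ledger that validates transactions before accepting them."""

    def __init__(self, account_names):
        self.account_names = set(account_names)
        self.posted = []

    def post(self, entries):
        """entries is a list of (account, direction, amount) tuples."""
        unknown = [account for account, _, _ in entries if account not in self.account_names]
        if unknown:
            raise ValueError(f"unknown accounts: {unknown}")
        debits = sum(amount for _, direction, amount in entries if direction == "debit")
        credits = sum(amount for _, direction, amount in entries if direction == "credit")
        if debits != credits:
            raise ValueError(f"unbalanced: debits={debits}, credits={credits}")
        self.posted.append(entries)


ledger = Ledger(["cash", "user_balances"])
ledger.post([("cash", "debit", 5000), ("user_balances", "credit", 5000)])

try:
    # A single-entry transaction, like some of the model outputs above, is rejected.
    ledger.post([("cash", "debit", 5000)])
except ValueError as error:
    print("rejected:", error)

print(len(ledger.posted))
```

With a guard like this, a model-generated transaction that fails the basic checks never reaches the books, no matter how plausible it looks.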

Matt McNierney serves as Engineering Manager for the Ledgers product at Modern Treasury, and is a frequent contributor to Modern Treasury’s technical community. Prior to this role, Matt was an Engineer at Square. Matt holds a B.A. in Computer Science from Dartmouth College.