Join our next live session with Nacha on modern, programmable ACH on February 12th.Register here →

Deduplication at Scale

Here, we'll take a closer look at deduplication.

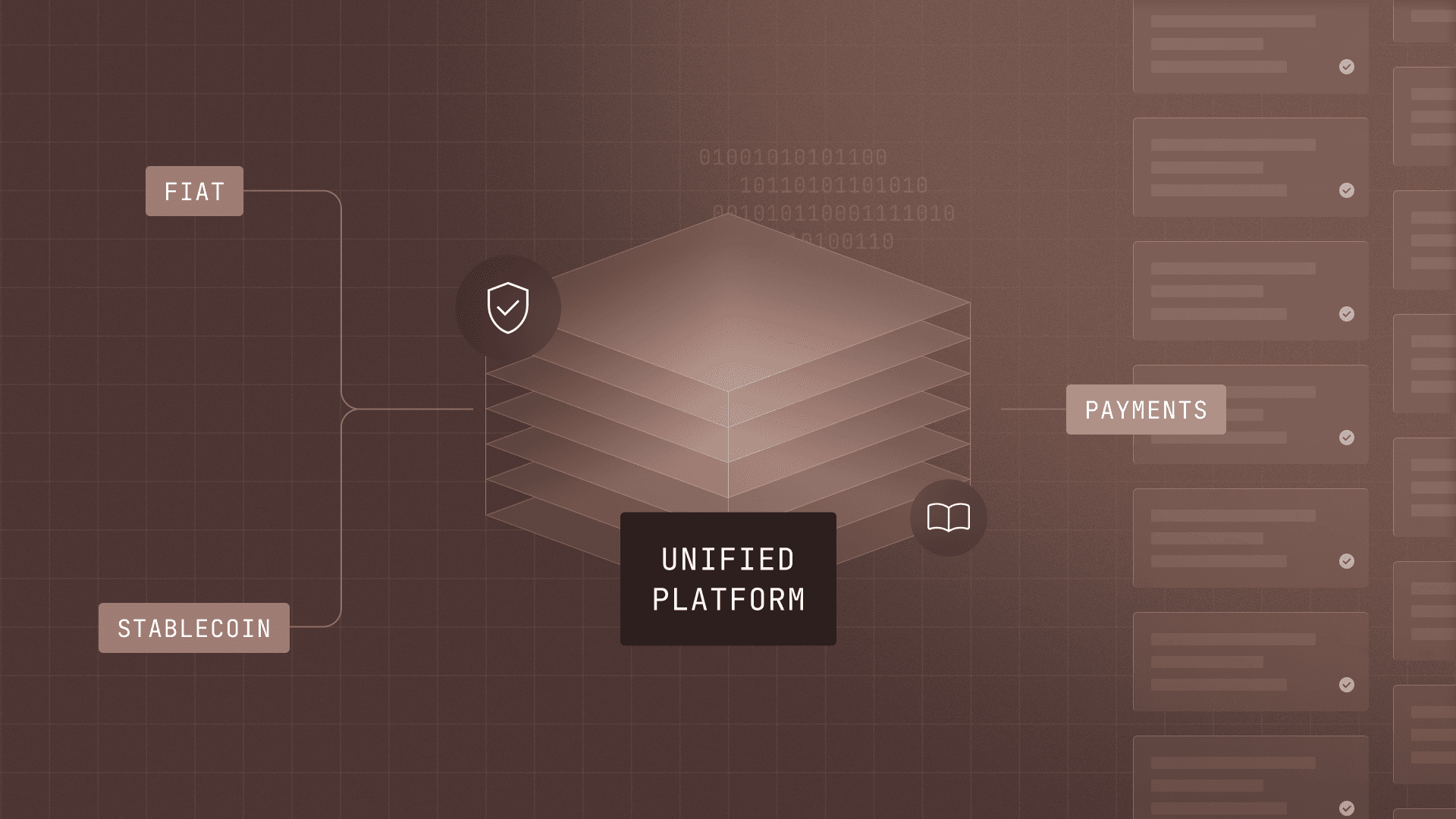

Every day at Modern Treasury we ingest our customers’ bank transactions. Part of our core product is to demystify and structure this data with speed and confidence, allowing our customers to track where their money is and act accordingly. This is of great value to our customers as transaction data is often messy, ambiguous, and hard to deduplicate.

Incorrectly processing duplicate bank data can lead to disastrous scenarios for our customers as they trust us as the single source of truth on their money movement. These scenarios pose serious financial and operational risks to customers, so we needed a highly resilient and scalable mechanism for blocking duplicates from entering our system.

As we grew, our original ingestion system started hitting its limits. So, before we reached a breaking point, we set out to re-architect the system to work at scale. The basic requirements were as follows:

- Never import a duplicate

- Adapt to any bank and payment rail (e.g. bai2, iso20022, API)

- Deduplicate 10,000 records/second

- Support ingesting millions of records a day

In this Journal, we’ll take a closer look at how we achieve this.

Diving Into Deduplication

To better understand the problem, it’s useful to see the data we are dealing with in these scenarios. Below is a short example of a bai2 file with two identical transactions.

The data is sparse, full of optional fields, and often lacking any consistent unique identifiers. This leads to natural questions such as:

- What happens if we receive two transactions in the same file that have the exact same data? Businesses often do receive duplicate payments from payers on purpose which can lack consistent unique identifiers. On top of this, we often receive repeated generic bank fees in this manner.

- What happens if we receive two transactions in different files that have the exact same data? It may be one of the cases described above, or there could be additional unique identifiers signifying a true duplicate.

- What happens if a bank has multiple formats for their unique payment identifiers depending on the payment type? We must be able to efficiently search and deduplicate for records across these multiple formats.

These are all scenarios that Modern Treasury experiences, so we needed to design a system that was resilient to these edge cases.

The Previous Solution

Our original solution relied on database lookups for fields that would make a transaction unique. If a record already existed with the same attributes, our system would drop it as a duplicate.

As we grew, we observed several key issues:

- Scalability. New payment rails and banks necessitated new strategies to effectively deduplicate. We had to maintain different indexes for keeping these lookups performant. As we scaled in payment volume, the increased write overhead and sub-optimal shared memory usage from these additional indexes affected overall database performance and slowed down our ingestion.

- Not Concurrency Tolerant. A classic database problem. We wanted the ability to horizontally scale our ingestion system by adding more bank pollers operating in parallel. When we were too aggressive with this strategy, we ran into pitfalls with concurrent pollers overlapping. In these cases, both pollers would see the same new bank data to ingest, but because neither of them committed their database transaction before executing the deduplication query above, we would end up with duplicate insertions.

- Brittle Framework. Bank data is full of edge cases, and this SQL-based approach was much too brittle when solving for them. We had multiple disjointed deduplication strategies with some using slower and complex

ILIKEqueries or had additional additional queries that further slowed the process and database down. These strategies were harder to implement and used the database ineffectively.

This compelled us to explore better solutions for this problem.

Exploring Solutions

Hashing

By generating a fingerprint (or hash) of the lookup fields and deduplicating based off of that, we could condense and simplify the deduplication logic across all banks and rails. This approach provides performance benefits by only necessitating one small index to deduplicate all records, rather than multiple larger composite indexes.

The fingerprint service can incorporate any popular hashing algorithm that is reasonably resistant to collisions such as MD-5, SHA-N, etc.

This reduces the write overhead of new records by reducing the number of indexes to update, and improves read performance by only needing to load a small, one column index that can fit into memory. On database reads during deduplication, this greatly reduces the amount of time we have to perform disk IO.

Database Constraint

It was an obvious design decision to represent the index for our fingerprint via a unique index.

Pushing uniqueness to the persistence layer allowed us to not only to increase our ingestion velocity by removing expensive search queries, but we also avoid a myriad of different concurrency edge cases by leveraging the ACID properties of a relational database.

If two or more threads are inserting a duplicate record simultaneously, our database guarantees that one will always win and the other will fail.

Unfortunately, this still wasn’t good enough to meet our requirements. Deduplicating and constraining on one potential hash was too brittle when considering the need to ingest true duplicate data in some cases and the overall state of the data, with inconsistent fields across imports.

Our Solution

The final solution we settled on was to use multiple potential hashes with a database constraint in a manner similar to Cuckoo hashing. If we run into a hash collision for records that aren’t actually duplicates, we generate a new secondary hash for one of the records to ensure they can both meet the constraint.

This gives us numerous additional benefits in our situation:

- Faster Computation. The first hash can be fast and avoid additional complex and slow sanitization some fields require. This is the common case, so most records avoid the slowest path.

- Collision Avoidance. Ingest duplicate data correctly without collisions with the database constraint.

- Granular Control. We can make more complex decisions about whether a record is duplicate or not, without relying on a single fingerprint/strategy.

With this new system, records being ingested take this path:

By implementing the constraint and hashing in this manner, we are able to effectively deduplicate records at a much higher scale by offloading the work to the database and short-circuiting in most cases while still providing robust correctness in cases of tricky, ambiguous imports.

The diagrams below help highlight a few cases where the original system failed, and where the new system provides additional database guarantees and more granular control.

By having multiple fingerprints generated only when needed, this system provides granular control and high velocity. The first fingerprint is generated from stable attributes where we don’t expect changes (i.e. amount) to provide an initial signal whether records are duplicate.

When performing additional attribute checks after a collision is encountered, we can begin to make risk-based decisions instead of binary decisions based on the record attributes. For example, two duplicate-looking $5 ACH bank fee records are likely real unique payments, whereas two duplicate high value wires are much more likely to be real duplicate records.

Additionally, we used MD5 hashes in a Postgres UUID column to keep the index small. MD5 collisions are non-trivial to trigger even maliciously so this works well.When inserting in parallel batches with a unique constraint, sort the records being inserted to prevent deadlocks.

Next Steps

Overall, this project ended up being a success by greatly speeding up our ingestion system while simultaneously providing stronger deduplication guarantees and cleaner interfaces for continuing to expand this system to new banks.

Prior to the release of the new system, particularly large file imports would time out completely due to the high database load generated by the previous system. With the new system in place, we can now quickly and confidently deduplicate those files, allowing our engineers to chase down other bottlenecks.

If you want to hear more about how engineering operates at Modern Treasury, reach out to us or check out our open positions.