
Floats Don’t Work For Storing Cents: Why Modern Treasury Uses Integers Instead

Using IEEE-754 Floats for fractional currency might seem like the most intuitive solution, but they can cause traps that can be avoided with variants of precise Integers.

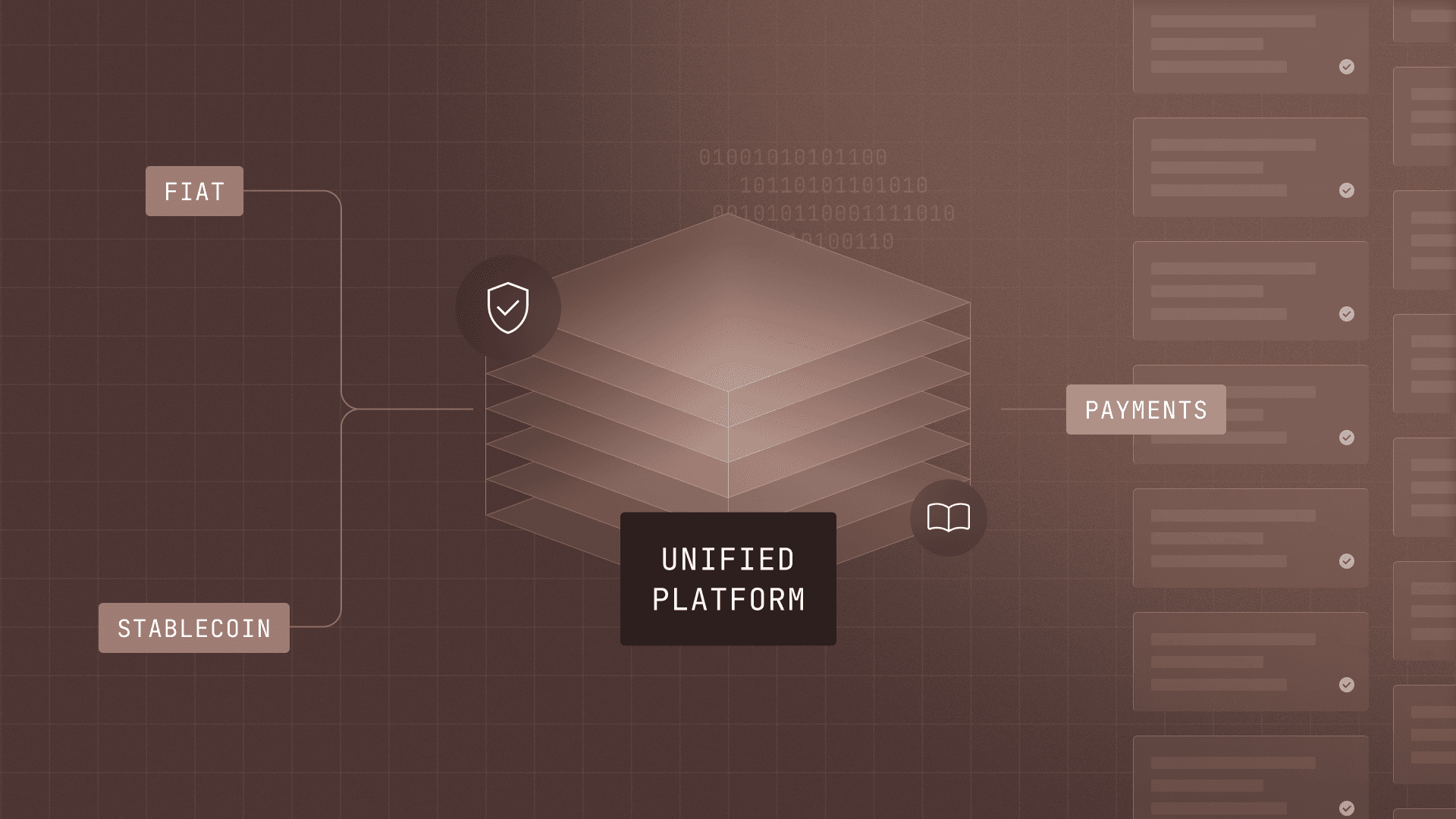

At Modern Treasury, we track, ledger, and facilitate the transmission of money with precision at scale. Building robust, currency-agnostic financial systems requires careful thought to how we represent monetary amounts in account balances and financial transactions, particularly when it comes to accounting for fractional parts of money like cents or pence.

In an era of prices ending in $.99 and digital payments allowing additional price calculations that are not convenient whole numbers, it’s important to store fractional monetary amounts correctly to maintain accurate balances.

Intuitively, this might seem easy enough to solve: nearly every computer system and programming language has built-in support for fractional numbers through IEEE-754 floats. But this approach introduces subtle and unavoidable errors. Fortunately, they can be avoided by using fixed precision types like Integers instead.

An Intro to Floating Point

IEEE-754 floating point datatypes are common in computing, and used almost universally to store real numbers with fractional parts. They take their name from allowing the decimal point of a number to "float," which allows the data to support an enormous range of numbers, while being economical with limited space available.

Floating Point Internals

Floats represent numbers using a format similar to scientific notation.

Consider 1.23456, 1234.56, 0.0123456, and -123.456. The digits are the same, but the sizes of numbers are different. To express these values as IEEE-754 floats, we split them into two parts: the number (called "significand," "mantissa," or "fraction") and the order of magnitude (called "exponent").

For simplicity, I’ll show this in decimal with powers of 10; computers store the same three pieces in binary as [sign, exponent, significand]:

| Number | Split Out Order of Magnitude | As Exponent of 10 | Representation |
|---|---|---|---|
| 1.23456 | 1.23456 × 1 | 1.23456 × 10⁰ | [+, 0, 123456] |
| 1234.56 | 1.23456 × 1000 | 1.23456 × 10³ | [+, 3, 123456] |
| 0.0123456 | 1.23456 × 0.01 | 1.23456 × 10⁻² | [+, -2, 123456] |
| -123.456 | -1.23456 × 100 | -1.23456 × 10² | [-, 2, 123456] |

- Float32 uses 1 sign bit, 8 bits for the exponent, and 23 stored bits for the significand (24 bits of precision, counting an implicit leading bit)

- Float64 uses 1 sign bit, 11 bits for the exponent, and 52 stored bits for the significand (53 bits of precision, counting an implicit leading bit)

This limits how precisely values can be stored, particularly for numbers requiring many digits.
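To make the layout concrete, here is a small Ruby sketch that pulls apart the three fields of a 64-bit float. The helper name `float64_fields` is made up for this illustration; it relies only on `Array#pack` and `String#unpack1` from Ruby's core library.

```ruby
# Split a Float (IEEE-754 binary64) into its sign, exponent, and significand fields.
# `float64_fields` is a hypothetical helper name used only for this example.
def float64_fields(value)
  # Pack as 8 big-endian bytes, then read those bytes back as one unsigned 64-bit integer.
  bits = [value].pack("G").unpack1("Q>")
  {
    sign: bits >> 63,                   # 1 bit: 0 for positive, 1 for negative
    exponent: (bits >> 52) & 0x7FF,     # 11 bits, stored with a bias of 1023
    significand: bits & ((1 << 52) - 1) # 52 stored bits; the leading 1 is implicit
  }
end

p float64_fields(1.0)  # sign 0, exponent 1023, significand 0
p float64_fields(-2.0) # sign 1, exponent 1024, significand 0
p float64_fields(1.5)  # sign 0, exponent 1023, significand 1 << 51
```

The exponent values look large because the stored field is biased: subtracting 1023 gives the actual power of two.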

The Subtle Traps of Floats

Note: The programming examples here use Ruby, which is the programming language used at Modern Treasury. These should be readable even if you have never used Ruby, and other languages should behave quite similarly.

Limited Significant Digits

One of the main strengths of floating point numbers is that they can represent enormously large numbers in very little storage, by capping the number of significant digits and spending the remaining bits on the exponent. For example, a 32-bit float can reach magnitudes around 3.4 × 10³⁸, while a 32-bit signed integer only reaches around 2.1 × 10⁹ (max 2,147,483,647, to be precise). The key difference is that floats achieve this range by storing approximate values, while integer data types store integers exactly. Approximation is ideal for scientific computing and engineering applications, but a complication for finance.

Consider trying to represent $25,474,937.47 within 32 bits. At this magnitude, adjacent 32-bit floats are 2 apart, so the closest available value is $25,474,938.00, which is off by $0.53!
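One way to check what a 32-bit float really stores for this amount is to round-trip it through `Array#pack` with the single-precision directive. This is only a sketch; the variable names are illustrative.

```ruby
amount = 25_474_937.47

# Pack as a 32-bit float and read it back (the result is widened to Ruby's 64-bit Float).
as_float32 = [amount].pack("f").unpack1("f")

puts as_float32                       # 25474938.0
puts((as_float32 - amount).round(2))  # 0.53
```

Between 2²⁴ and 2²⁵, a 32-bit float can only land on even whole numbers, so the cents are lost entirely.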

Base 2 vs Base 10 Approximation

Even when numbers are small enough that limited significant digits aren’t an issue, most simple monetary values can’t be stored exactly using IEEE-754 floats. This is because decimal numbers in the financial world are base 10, but computers work in binary, base 2. Every floating point value is therefore a sum of powers of 2.

For example, to store $2.78 as a floating point number, we decompose it into powers of two (something like 2 + 0.5 + 0.25 + 2⁻⁶ + 2⁻⁷ + 2⁻⁸ + 2⁻⁹ + 2⁻¹¹…) until we get to the closest representation, which for a 32-bit float is 2.7799999713897705078125.

This is really close, but clearly not exactly equal to 2.78. This example isn’t unique; almost no typical amount in finance can be represented precisely unless it happens to be a sum of powers of 2, such as:

- $0.5 = 2⁻¹

- $16 = 2⁴

- $24.25 = 16 + 8 + 0.25 = 2⁴ + 2³ + 2⁻²

Using IEEE-754 floats to represent money therefore demands recurring workarounds, like rounding or checking whether a value falls within an error tolerance.
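The representation issue described above can be checked directly in Ruby, since `Float#to_r` returns the exact value a float stores as a fraction. The variable names below are illustrative only.

```ruby
# Amounts that are sums of powers of two are stored exactly...
half_is_exact   = 0.5.to_r == Rational(1, 2)      # true
mixed_is_exact  = 24.25.to_r == Rational(97, 4)   # true

# ...but 2.78 is not: the stored value differs from 278/100.
price_is_exact  = 2.78.to_r == Rational(278, 100) # false

# Squeezing 2.78 into 32 bits gives the value quoted in the text.
float32_price   = [2.78].pack("f").unpack1("f")
matches_quoted  = float32_price.to_r == Rational("2.7799999713897705078125") # true

# The classic symptom: arithmetic on inexact values does not land where expected.
sum_is_expected = (0.1 + 0.2 == 0.3)              # false
puts 0.1 + 0.2                                    # 0.30000000000000004
```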

Consider a hypothetical situation where a user is entering a Microdeposit amount to verify they own their bank account:
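A minimal sketch of such a check, assuming the expected amount came out of an earlier floating point calculation (the variable names and the way the amounts are obtained are illustrative assumptions):

```ruby
expected_amount = 0.17953715949733673 # produced somewhere upstream by float math
entered_amount  = "0.18".to_f         # what the user typed into the form

verified = (expected_amount == entered_amount)
puts verified # false
```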

What went wrong? We tried to compare 0.17953715949733673 to 0.18, which, while close, aren’t the same thing. There are a few possible fixes:
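As a sketch of what such fixes could look like (the variable names and the half-cent tolerance are illustrative assumptions, not a recommendation):

```ruby
expected_amount = 0.17953715949733673
entered_amount  = 0.18

# Option 1: round both sides to cents before comparing.
rounded_match = expected_amount.round(2) == entered_amount.round(2)

# Option 2: accept any difference smaller than half a cent.
TOLERANCE = 0.005
tolerance_match = (expected_amount - entered_amount).abs < TOLERANCE

# Option 3: convert both sides to integer cents and compare integers.
cents_match = (expected_amount * 100).round == (entered_amount * 100).round

puts rounded_match   # true
puts tolerance_match # true
puts cents_match     # true
```

Each option works here, but each one also has to be remembered at every comparison site.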

It’s easy to see how this starts increasing the complexity of the code.

Different Rounding Schemes

Standardizing on a rounding approach isn’t enough; we must also be careful about which rounding scheme we use. IEEE-754 defines five different types of rounding that you must explicitly account for, and many implementations add a bonus one to consider:

1. Round towards zero (Truncation)

2. Round towards positive infinity (Ceiling)

3. Round towards negative infinity (Floor)

4. Round to nearest, ties to even (Banker’s/Gaussian rounding)

5. Round to nearest, ties away from zero (e.g., when exactly halfway, round away from zero)

6. Round to the nearest, ties towards zero (e.g., when exactly halfway, round towards zero)

This isn’t part of the IEEE five methods, but many languages like Ruby implement it as a bonus.
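The six modes listed above can be sketched in Ruby using `Float#truncate`, `#ceil`, `#floor`, and the `half:` option of `Float#round`. The helper name `rounding_modes` and the hash keys are made up for this example.

```ruby
# Apply each of the six rounding modes to a value, in the order listed above.
# `rounding_modes` is a hypothetical helper for illustration.
def rounding_modes(x)
  {
    toward_zero:         x.truncate,
    toward_positive_inf: x.ceil,
    toward_negative_inf: x.floor,
    half_even:           x.round(half: :even),
    half_away_from_zero: x.round(half: :up),
    half_toward_zero:    x.round(half: :down)
  }
end

p rounding_modes(2.5)
# toward_zero: 2,  toward_positive_inf: 3,  toward_negative_inf: 2,
# half_even: 2,    half_away_from_zero: 3,  half_toward_zero: 2

p rounding_modes(-2.5)
# toward_zero: -2, toward_positive_inf: -2, toward_negative_inf: -3,
# half_even: -2,   half_away_from_zero: -3, half_toward_zero: -2
```

2.5 is used because it is exactly representable in binary, so it is a genuine tie.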

Another thing to notice is that several of these modes give the same result for positive numbers but differ for negative numbers; truncation and floor, for example, agree on 2.5 but not on -2.5.

Failing to take these behaviors into account can have disastrous consequences. The scary thing is that such errors are neither anomalous nor new: Slate’s The deadly consequences of rounding errors describes how they led to lost stock value, changed election results, and incorrect missile strikes. Rounding errors are even a major plot point in movies like Office Space.

Note: Rounding errors are not unique to floats; in some use cases, integer based data formats may also need rounding logic if working with different precisions. We don’t need to account for this with Modern Treasury, but your case may vary.

Rounding Default Mode Varies Across Implementations

Given the various ways that rounding modes can create confusion, it’s tempting to rely on platform-default rounding behavior instead of being explicit. Most implementations will default to Banker’s rounding, which works until you run into rare cases where the defaults vary.

For example:

- Ruby (half away from zero)

- Python (half even)

- JavaScript (half towards positive infinity)

Today, it’s common to build web applications with a JavaScript frontend and a different backend language, so sticking to the language’s default may not be practical. At Modern Treasury, we use a JavaScript frontend with a Ruby backend, for example.

You might think that if there’s inconsistent behavior across languages, it should be fine if you use the same language throughout, right? Not necessarily. Consider when Python switched the default behavior between versions 2 and 3, a change hidden away in “Miscellaneous Other Changes.”

- Python2 (half away from zero)

- Python3 (half even)

Due to various breaking changes between Python 2 and 3, both are still quite extensively used today. Python is not alone here; the Ruby 2.4.0 pre-release also introduced a change to rounding behavior that caused major issues almost a decade ago and immediately got reverted.

Now you might think, okay, if we use the same language and same version, we should still get the same rounding behavior, right? Again, not necessarily. Consider:

- Ruby’s round (half away from zero)

- Versus Ruby's sprintf (half even) which is based on C’s formatted strings
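The difference between the two is easy to see on an exact tie. A small sketch (variable names are illustrative):

```ruby
value = 2.5 # exactly representable, so this is a true tie

rounded   = value.round           # Float#round: ties go away from zero
formatted = format("%.0f", value) # Kernel#format (sprintf): ties go to even

puts rounded   # 3
puts formatted # 2
```

Both calls live in the same language and the same version, yet a value formatted for display can disagree with the value that was rounded for storage.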

To deal with these differences, the only reliable scheme is being explicit about the rounding mode across all function calls in every environment.

Binary Rounding Versus Floating Point Rounding

Even with the same language, the same mode, and consistent rounding behavior, results can still be surprising. Consider half even:
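As an illustration, the sketch below rounds to one decimal place with ties to even, once on the decimal number as written and once on the value the float actually holds. It uses `Rational` so that both roundings are exact; the choice of 0.25, 0.35, and 0.45 is just for demonstration.

```ruby
amounts = {
  0.25 => Rational(25, 100), # stored exactly, so it really is a tie
  0.35 => Rational(35, 100), # stored slightly below 35/100
  0.45 => Rational(45, 100)  # stored slightly above 45/100
}

amounts.each do |float, decimal|
  intended = decimal.round(1, half: :even)    # rounding the number we wrote
  actual   = float.to_r.round(1, half: :even) # rounding the number the float holds
  puts "#{float}: intended #{intended.to_f}, actual #{actual.to_f}"
end
# 0.25: intended 0.2, actual 0.2
# 0.35: intended 0.4, actual 0.3
# 0.45: intended 0.4, actual 0.5
```

Only 0.25 is a real tie in binary. The other two are never halfway to begin with, so the tie-breaking rule is not even consulted.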

The computer is computing things correctly, but binary floating representation defies our intuition, since we’re trying to think in decimal.

Two Different Zeros

At Modern Treasury, we send lots of zero-value payments that represent special instructions. If we were to use IEEE-754 to represent the amount of these payments, we would risk running into yet another subtle quirk: two distinct representations of zero (positive zero and negative zero).

The specification defines them as equal, so you’re unlikely to run into serious problems, but their binary representations differ. So in some cases the two zeros don't give equal results:
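A short Ruby sketch of where the two zeros agree and where they part ways (variable names are illustrative):

```ruby
positive_zero = 0.0
negative_zero = -0.0

numerically_equal = positive_zero == negative_zero                         # true
same_string       = positive_zero.to_s == negative_zero.to_s              # false: "0.0" vs "-0.0"
same_bytes        = [positive_zero].pack("G") == [negative_zero].pack("G") # false: sign bit differs

puts 1 / positive_zero # Infinity
puts 1 / negative_zero # -Infinity
```

Anything that compares serialized output, raw bytes, or the result of a division can therefore tell the two apart even though `==` cannot.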

As long as you’re careful with the type of equality comparison, this one is avoidable, but it’s definitely a subtle trap to keep in mind.

Mathematical Operations Can Cascade Errors

With floating point math, the point at which numbers are rounded and the order of operations can create subtle differences. Worse, these errors only happen in a small fraction of cases, so it’s easy for them to slip by unnoticed, but even one error can cascade into large financial systems not fully adding up. Consider two different methods of adding up to a total, using Banker’s rounding, that lead to a one-cent difference:
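A minimal sketch of that effect, assuming two hypothetical fees of 12.5 cents each held as float dollars. Banker's rounding is requested explicitly with `half: :even`, since it is not the default for Ruby's `Float#round`.

```ruby
# 0.125 is exactly representable in binary, so each fee is a genuine tie at the cent level.
fees = [0.125, 0.125]

# Method A: round each fee to whole cents, then add.
round_then_sum = fees.sum { |fee| (fee * 100).round(half: :even) }

# Method B: add first, then round the total to whole cents.
sum_then_round = (fees.sum * 100).round(half: :even)

puts round_then_sum # 24
puts sum_then_round # 25
```

Neither method is wrong in isolation, which is exactly why two parts of a system can disagree by a cent.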

With more complex calculations, larger numbers, or larger volumes of transactions, these differences can snowball. Standard arithmetic operations like addition, subtraction, multiplication, and division can produce slightly different results depending on the order in which they’re applied. The result will likely be very close to the right answer, which is often okay for scientific or graphics use, but financial systems demand exact results.

Learning More About Floats

If floats still make sense for your use case, make sure to have a good understanding of all the possible nuances. Check out these links to learn more.

- Understanding different IEEE-754 rounding modes

- Understanding various float calculation errors

- Understanding nuance about floats with a list of theorems, concepts, proofs and implementation details

- IEEE-754 Floating Point Converter: Interactive tool to test the limitations of various floating point numbers

Modern Treasury’s Solution: Integers

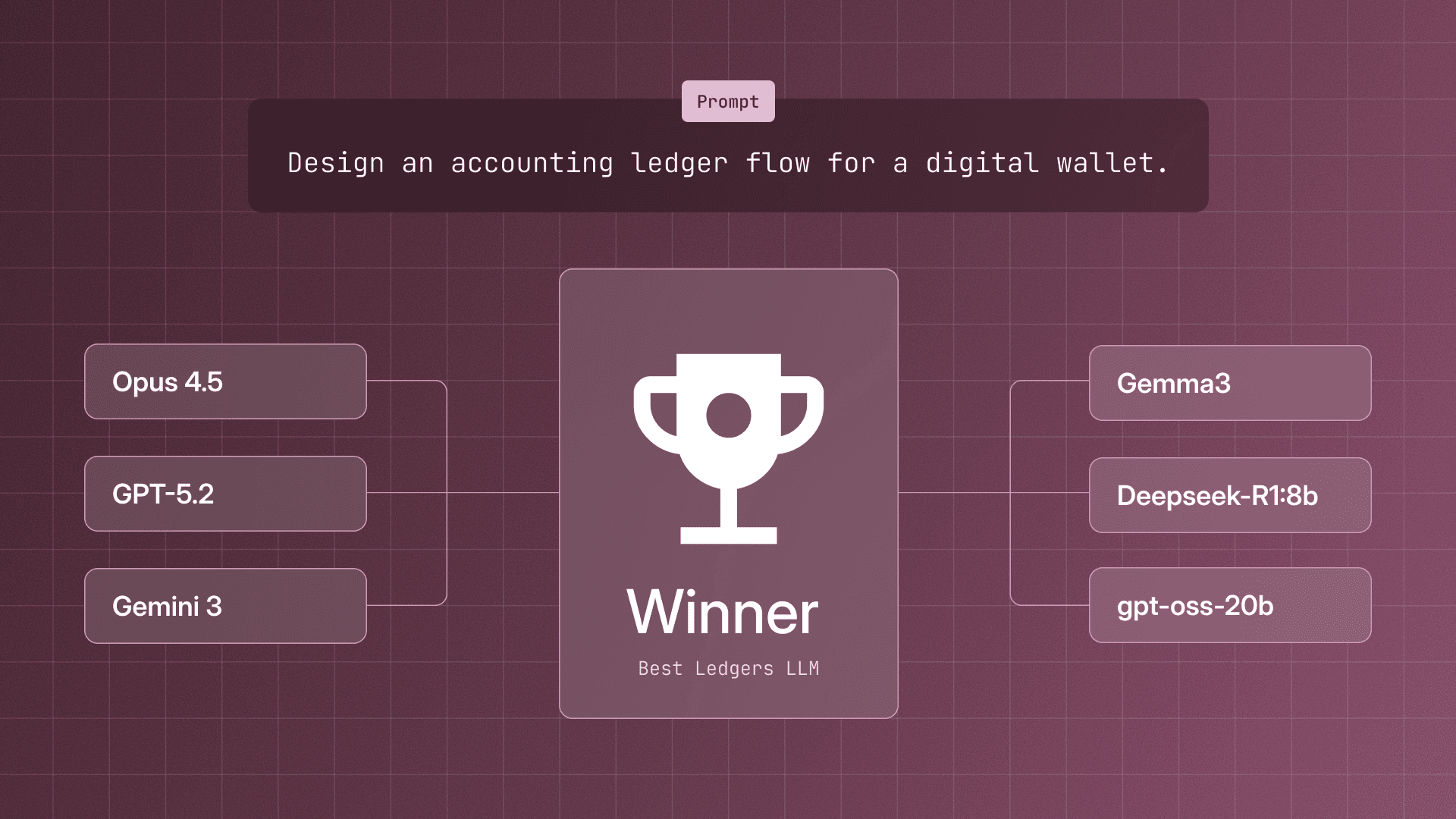

At Modern Treasury, we primarily use 64-bit Integers to represent money in our system (like when creating a payment order).

Using integers enables us to precisely represent every single amount possible within a range of -92,233,720,368,547,758.08 to 92,233,720,368,547,758.07, which is over 92 quadrillion dollars. With money movement, precision is everything. Also, since that range is more than eight hundred times the entire GDP of the world, we suspect we won’t need to represent anything larger (which would be a reason for using float). A single payment order is unlikely to break that threshold.
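The range quoted above follows directly from the bounds of a signed 64-bit integer counted in cents. A quick sketch (constant and variable names are illustrative):

```ruby
INT64_MAX = 2**63 - 1
INT64_MIN = -(2**63)

# Split the largest cent amount into dollars and cents using integer math only.
max_dollars, max_cents = INT64_MAX.divmod(100)
puts "#{max_dollars}.#{max_cents.to_s.rjust(2, '0')}" # 92233720368547758.07

# A 64-bit float, by contrast, cannot hold every integer above 2**53.
float_loses_one = (2**53 + 1).to_f == 2**53
puts float_loses_one # true: the +1 is lost in the conversion
```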

Additional benefits:

- Fast and hardware optimized. Nearly all standard operations can happen in a single cycle on recent CPUs, which can do billions of cycles per second. There are many hardware-level optimizations for int64 specifically, and these parallelize very well. Even a simple smartphone processor can handle an enormous number of int64 operations with ease. This fast underlying data structure means Modern Treasury can scale to huge amounts of financial volume.

- Storage efficiency. In modern computers, 64 bits is the size of a single machine word. Picking efficient, fixed-width data types allows us to store amounts efficiently in memory and databases with very fast read/write performance.

- Compatibility. It’s one of the primitive datatypes supported in practically every programming language and computer system. Having baseline support instead of a dependency keeps the codebase simpler, and fewer imports make for a more reliable system. Many of the systems with which we integrate also accept integer amounts, so we don't have to create custom logic to handle conversions.

- Readability. Standard arithmetic operations work out of the box, and don’t need special imports. Having more readable code lets us focus elsewhere on the more important business logic.

In our system, we store int64 values using PostgreSQL’s bigint, and read or operate on them in our backend as Ruby's Integer. We store all currency in the units of the fractional currency (meaning we store all US Dollars and Euros in cents). For example, $12.34 will be stored as the integer 1234. We don’t have to worry about rounding unless we're dealing with fractional amounts of the subcurrency like a portion of a single cent. Alongside the amount, we also store the currency to determine the number of decimal places using the standard.
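As a rough sketch of working with amounts this way, the example below keeps everything in integer minor units and only produces a decimal string for display. The `MINOR_UNIT_DIGITS` table and the `format_amount` helper are hypothetical names for illustration and are not Modern Treasury's actual code.

```ruby
# Hypothetical lookup of decimal places per currency (illustrative subset).
MINOR_UNIT_DIGITS = { "USD" => 2, "EUR" => 2, "JPY" => 0 }.freeze

# Format an integer amount of minor units as a decimal string, using only integer math.
def format_amount(minor_units, currency)
  digits = MINOR_UNIT_DIGITS.fetch(currency)
  return minor_units.to_s if digits.zero?

  sign = minor_units.negative? ? "-" : ""
  whole, fraction = minor_units.abs.divmod(10**digits)
  "#{sign}#{whole}.#{fraction.to_s.rjust(digits, '0')}"
end

price_cents = 1234            # $12.34
total_cents = price_cents * 3 # exact integer arithmetic

puts format_amount(total_cents, "USD") # 37.02
puts format_amount(5, "USD")           # 0.05
puts format_amount(-1234, "USD")       # -12.34
puts format_amount(500, "JPY")         # 500
```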

If 64-bit integers aren’t big enough for your use cases, most mainstream programming languages have 128-bit integer support as well. This should cover almost all cases, but if it doesn’t, there are many other alternatives that also use a fixed and precise representation underneath.

Note: If you’re using JavaScript, keep in mind that the number type isn’t an integer but actually an IEEE-754 floating point number. Since number only supports floats, you can get integers through BigInt or third-party libraries. Alternatively, use integers in a different backend language and have JavaScript handle amounts only at the display level, which is what we do at Modern Treasury.

Alternative Solutions

BigNumber

If you require integers of even larger range than allowed by int64, there are Integer types that can scale to any size. Typically these work by allocating more 64-bit blocks as needed to fit whatever number is required.

| Advantages | Disadvantages |
|---|---|
| Closest datatype to just working with integers | Harder to optimize lookups due to variable size |
| Works for any size and precision of numbers (good for crypto or when very precise money amounts are needed) | Often slower than raw integers in operations due to lack of a fixed size |
| In some languages like Ruby or Python, raw integers are automatically converted to arbitrary precision integers | Depending on the language, there might be slightly different syntax |

Available as:

- Ruby’s Integer - autoconverting

- Python’s int - autoconverting

- Go’s big.Int - explicit data type

- JavaScript’s BigInt - explicit data type

- Java’s BigInteger - explicit data type

- C#’s BigInteger - explicit data type

- For Databases like PostgreSQL there is numeric where an arbitrary fixed precision can be set
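In Ruby, the autoconversion mentioned in the list above means there is nothing special to write. A brief sketch (variable names are illustrative):

```ruby
int64_max = 2**63 - 1

beyond  = int64_max + 1         # no overflow; the result is still an Integer
squared = int64_max * int64_max # far beyond 64 bits, still exact

puts beyond.class                       # Integer
puts squared.bit_length                 # 126
puts squared == 2**126 - 2**64 + 1      # true
puts squared / int64_max == int64_max   # true: nothing was lost
```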

BigDecimal

BigDecimal is a higher level datatype that combines the exactness of Integers with moving precision just like Floats while building upon the variable size like BigNumber. These have a much smaller range than floats, but they make up for this with exactness in storage. BigDecimal is generally implemented as a hybrid datatype which stores one arbitrary size integer alongside another number which represents the precision.

| Advantages | Disadvantages |
|---|---|
| Nice interface to do code operations in a single datafield | Harder to optimize lookups due to variable size |
| Wide variety of payment systems and libraries that assume currency in BigDecimal such as Java’s Money or Ruby’s money gem library | Slower to accommodate the precise decimal structure for many complex cases |

Available as:

- Ruby’s BigDecimal

- Python’s decimal

- Go’s big.Float

- JavaScript has no native support but there are packages like js-big-decimal

- Java’s BigDecimal

- C# has no native support but there are packages like ExtendedNumerics.BigDecimal

- Database support is varied, but popular databases like PostgreSQL support arbitrary precision integers through numeric, so a BigDecimal can be stored as that integer plus a second number representing the moving precision. In PostgreSQL, the moving precision number can even be a smallint, taking only 2 bytes, since any practical currency is unlikely to need moving precision beyond -32768 to +32767.
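For comparison with the float examples earlier, here is a short sketch using Ruby's `BigDecimal` (loaded with `require "bigdecimal"`). The amounts are arbitrary examples.

```ruby
require "bigdecimal"

float_sum   = 0.1 + 0.2
decimal_sum = BigDecimal("0.1") + BigDecimal("0.2")

puts float_sum == 0.3                 # false
puts decimal_sum == BigDecimal("0.3") # true

# Decimal ties are real ties, so the rounding mode behaves as written.
up_to_even   = BigDecimal("2.675").round(2, BigDecimal::ROUND_HALF_EVEN)
down_to_even = BigDecimal("2.665").round(2, BigDecimal::ROUND_HALF_EVEN)

puts up_to_even.to_s("F")   # 2.68
puts down_to_even.to_s("F") # 2.66
```

Note that the values are built from strings. Constructing a decimal from a float literal would carry the float's approximation along with it.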

Strings

If you don’t need to do any operations on your data, storing it internally as a String also works. For example, 12.24 is literally stored as “12.24”, a 5 character string.

| Advantages | Disadvantages |
|---|---|
| Avoids all the representation headaches since you’ll be storing data as-is | Needs much larger storage since each character is stored individually taking at least one byte (UTF-8 or ASCII) |
| If you just want to pipe data to other systems without worrying about the complexities of representation, this would be a fine solution | Doing any arithmetic operation also requires converting it to a numeric type, which can be time intensive |
| | You also have to ensure that the data is sanitized, validated, and trimmed correctly since Strings can have non numeric data too |

Available as:

Strings exist in basically every language, system, and database so support is quite solid as well. In terms of databases like PostgreSQL, varchar with a fixed limit will work for storage.
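To give a sense of the validation and conversion work involved, here is a sketch that parses a two-decimal amount string into integer cents without going through a float. The pattern and the `cents_from_string` helper are hypothetical and assume exactly two decimal places.

```ruby
# Hypothetical pattern: optional minus sign, digits, a dot, exactly two decimals.
AMOUNT_PATTERN = /\A(-?)(\d+)\.(\d{2})\z/

# Validate a decimal string and convert it to integer cents, never touching Float.
def cents_from_string(text)
  match = AMOUNT_PATTERN.match(text.strip)
  raise ArgumentError, "not a valid amount: #{text.inspect}" unless match

  sign, whole, fraction = match.captures
  cents = whole.to_i * 100 + fraction.to_i
  sign == "-" ? -cents : cents
end

puts cents_from_string("12.24")   # 1224
puts cents_from_string(" -0.05 ") # -5
```

Input such as "12.2", "12,24", or "12.24abc" raises an `ArgumentError` in this sketch instead of being silently coerced.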

Storing Whole and Fractional Separately

It’s also possible to store dollars and cents as a hybrid data type in a different way from BigDecimal, using something like a pair tuple, struct, or map. Consider $12.24 represented as (12,24) or {dollar: 12, cent: 24}.

| Advantages | Disadvantages |
|---|---|
| These hybrid data types can be quite readable in code | Conversions and arithmetic operations need custom logic, and are often slower because every operation has to handle two numbers |
| Useful for display and serialization logic that is often splitting data out to units and sub units | Around twice as large since we’re storing two numbers |
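A small sketch of the custom logic this approach needs, using a Ruby `Struct`. The `Money` name and its methods are made up for this example, and it only handles non-negative amounts to keep the carry logic short.

```ruby
# Hypothetical pair type: whole dollars and cents stored as two integers.
Money = Struct.new(:dollars, :cents) do
  # Addition has to carry overflowing cents into dollars by hand.
  def +(other)
    carry, cents_sum = (cents + other.cents).divmod(100)
    self.class.new(dollars + other.dollars + carry, cents_sum)
  end

  def to_s
    format("%d.%02d", dollars, cents)
  end
end

total = Money.new(12, 24) + Money.new(0, 99)
puts total # 13.23
```

Subtraction, negative amounts, and multiplication would each need similar hand-written handling.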

Talk To Us

At Modern Treasury, we think this deeply about your payment infrastructure so you don’t have to. If you’d rather not worry about the intricacies of numeric representations of your payment operations, talk to us.